Best Of

🎓Academy Reimagination 2025 Highlights

Board Academy 2025: A Year of Transformation — And What’s Next for 2026

In 2025, Board Academy embarked on an exciting journey to reimagine the learner experience, guided by one bold vision: Delivering the Right Education, in the Right Way, at the Right Time... Every Time!

This transformation is shaped by you, and this is just the beginning! Reimagining Academy is not a one-time initiative; it’s an ongoing commitment. We’re continuously improving the experience and our offerings to keep learning relevant, engaging, and effective.

In the interactive video below, you’ll discover:

- What we accomplished in 2025

- What’s launching this quarter

- What’s coming in 2026

- And how we’re turning our promises into action

🎥 Watch the video and let us know what excites you most!

Re: Dynamic Header on Dataview

Hi Akrem,

Ahhh. I tried that one: the if condition with a zero value. The difference is that I didn't set "By Column", so all the columns on the datablock are still shown, and when the zero condition is triggered, the column shows a zero value instead of being hidden. Okay, let's try it. Thanks a lot.

Putra

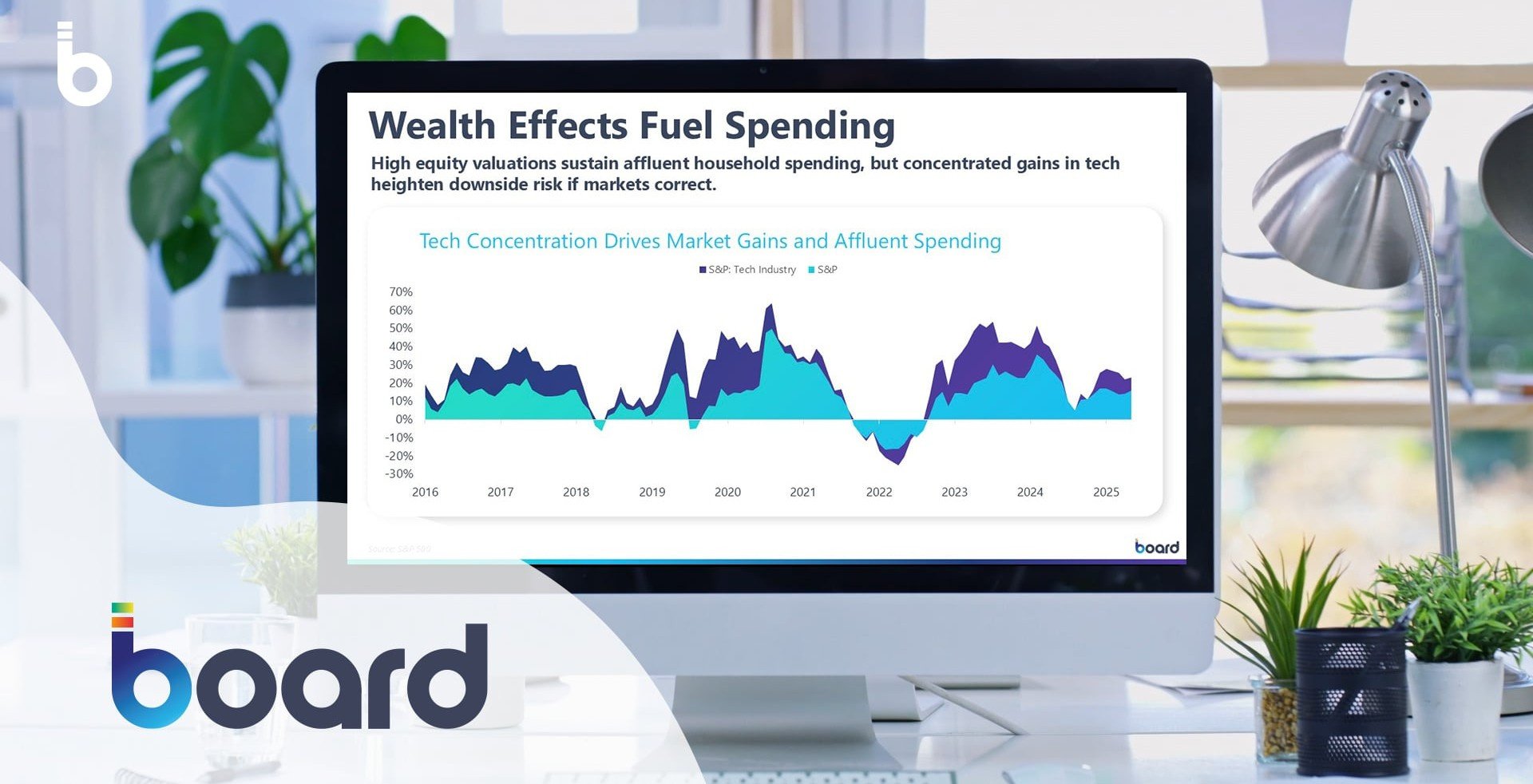

US Economic Outlook: February 2026

As the U.S. economy moves toward 2026, consumer spending growth is increasingly concentrated among high-income households. Board’s February Economic Outlook explores how this K-shaped dynamic reshapes risk, volatility, and planning assumptions for business leaders.

Rather than broad-based income gains, today’s expansion is being driven by AI-fueled asset appreciation—creating momentum, but also vulnerability if markets correct.

In this outlook, you’ll learn:

- Who is driving consumer spending growth today

- Why wealth effects matter more than wages

- Where the biggest downside risks lie for 2026

- Which indicators leaders should monitor closely

Why this matters:

Concentrated growth requires more agile, data-driven planning—and Board helps organizations turn economic signals into confident decisions.

Monthly Customer News Update - February

The Innovation Edition

From Emerging Ideas to Real-World Impact

Innovation only matters when it changes decisions. This month’s edition looks at how AI, predictive intelligence, and unified planning are already shaping how organizations plan, adapt, and execute, at every level.

- Agentic AI and the evolving CFO role

- Board Agents in real planning workflows

- AMMEGA’s 20% time savings

- The Boardroom News - Where strategy meets execution

- The new Board Academy experience

February is all about innovation that actually shows up in day-to-day decisions. I hope this edition gives you a few practical ideas you can apply immediately, and a glimpse of where planning is heading next.

Warm regards,

Sandra

Head of Customer Marketing

Inspire

Where Planning is Heading

Why Agentic AI will redefine the CFO’s Role

AI is moving beyond automation. Agentic AI actively supports decisions, reshaping how finance leaders guide performance, manage risk, and orchestrate planning across the business.

Economic Outlook: The Risk Behind 2026 Growth

Nearly two-thirds of consumer spending growth now comes from the top 20% of households, creating a concentration risk that could reverse quickly if asset markets correct. Board’s Principal Economist unpacks what this means for stability, volatility, and the decisions leaders need to prepare for now.

New: The Boardroom—Where Strategy meets Execution

In February, we launched The Boardroom, our new monthly LinkedIn newsletter exploring the ideas and design choices shaping modern enterprise planning. Each edition focuses on one theme, starting with agentic AI and how it’s changing real decision-making across finance and operations.

APPLY

Innovation in Practice

Webinar: Board Agents in Action

See how agentic AI works inside Board. This live session shows how Board Agents help teams move from insight to execution with speed and confidence.

AMMEGA saves 20% Time through Unified Planning

Sometimes innovation is more than just technology. It’s a quiet shift in how planning works. With Board, AMMEGA didn’t just save time. It changed how decisions are made across the business, cutting data preparation effort by 20% and enabling more confident, forward-looking analysis.

EXPLORE

Industry Insight

Retail Merchandise Planning Report | Coresight x Board

How leading retailers are modernizing merchandise planning with predictive insights and integrated decision-making.

Need more? Here's an interesting read from our colleague Matt Hopkins that you won't want to miss.

ENABLE

Build Capability Faster

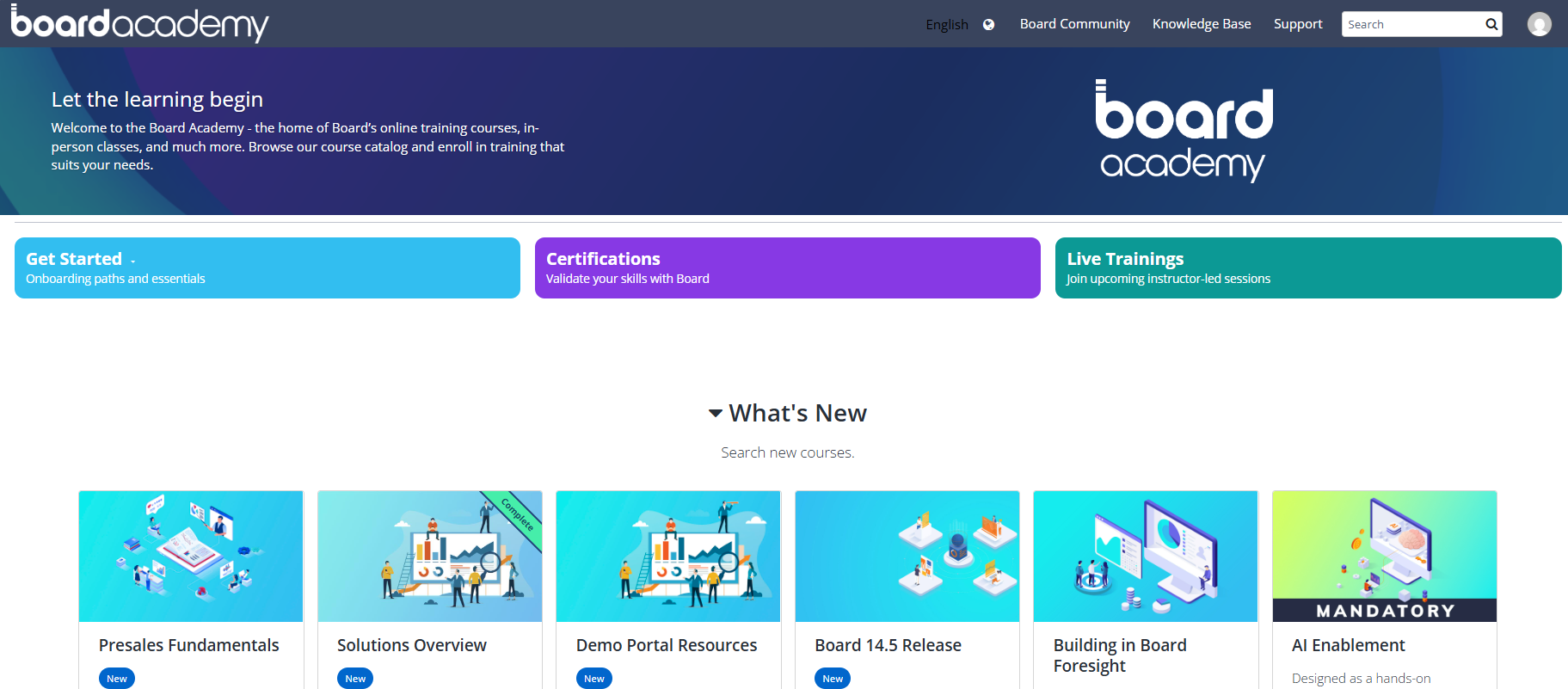

Discover the New Board Academy Interface

The new Board Academy interface makes it easier to build skills as planning changes, with clearer navigation, role-based learning paths, and faster access to certifications.

A new Way to shape What comes next

The new Board Idea Exchange gives you a direct way to share ideas, vote on improvements, and influence the future of the platform. It creates a more open, collaborative space where feedback turns into action and innovation is shaped together with the community.

How-To Guide: How to map Entities using Text Cubes

See how text cubes can be used to map entities in Board, enabling more flexible and maintainable relationships without restructuring your model.

Webinar: Bridging HR and Finance Planning

Workforce costs are a major driver of performance, yet HR and Finance often plan separately. In this session with EY and Board customer Telegraph Media Group, learn how connecting HR data with financial planning improves cost visibility, forecasting accuracy, and decision-making.

CONNECT

Join the Community

Board Beyond Events

Innovation doesn’t land in isolation. Board Beyond brings together customers, partners, and experts to see how new ideas are applied in real planning environments, through in-person and virtual sessions focused on what actually works.

Innovation isn’t an announcement. It’s what you do next.

Thanks for building it with us.

Re: Breakpoints for a regular User

Hi @Robert Barkaway, thanks for the feedback. This does not seem like normal behavior, since with a Lite license the breakpoint should be ignored, but I suspected something like this because the debugger is interactive.

From what I see in the diagnostic log, the scheduler does not ignore the debug step even with a Lite license and sends it to the client, which fails and exits.

Etienne

Re: different number types and Totals

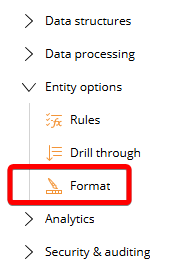

@Maik Burghardt, for the decimals and suffix, I would use the Format (if you are using an Entity by row in your screenshot).

After creating the Format, head to the DataView panel on the Screen, and attach the Format/Template to your DataView.

For the Share, depending on your calculation, you might be able to Use a Rule, and Apply on Totals.

Re: Dynamic Header on Dataview

Hi Akrem,

Thanks for the insight, really appreciate it. Actually, we did it the way you described. The value is on point, but we still have to find the name of the header.

Putra

Introducing The Boardroom!

Welcome to The Boardroom, Board's inaugural monthly LinkedIn newsletter, which explores how finance, supply chain, merchandising, and operations leaders move from static cycles to continuous, connected enterprise planning.

The first edition dives into a shift reshaping the space: enterprise-ready agentic AI, and how Board Agents bring it to life.

Subscribe to The Boardroom today for monthly insights, and keep an eye out for the March edition, which will cover AI-driven finance transformation and how to turn agility into advantage.

Don't forget to also register for our 60-minute webinar on February 25 when you’ll see how Board Agents are enabling the Office of Finance to shift from static planning cycles to live, self-adjusting systems—powered by trusted data, intelligent agents, and human oversight.

As always, we welcome your feedback in the comments below or on LinkedIn.

Re: Level 200: Module 207 Pressing button "Apply 5% increase" empties column CY budget

Info: I looked again at Level 200: Module 207, Step 1.3: Nothing there about setting PY… (I use the German version).

🎓LEARN! GROW! ACHIEVE! We are happy to share some exciting updates and launches in Academy.

📚New Courses launched this month:

Solutions Overview

About this course:

This course introduces Board’s Solutions strategy and explains why it is critical to delivering faster, more consistent customer value. You will learn how Board has evolved from a flexible platform to a solutions-driven approach, how Engineering, Platform, and Solutions work together to drive innovation, and how Solutions help Board teams engage customers around business outcomes rather than technology alone.

👥Target audience: Internal Employees and Partners.

Presales Fundamentals

About this course:

This new learning program is designed to strengthen presales capabilities across our teams and partners through two focused learning paths — Presales Foundations and Presales Soft Skills. Together, these paths build the core knowledge and interpersonal skills required to succeed in today’s sales and presales environment, ensuring participants are confident, prepared, and aligned to deliver high-impact customer engagements and effectively support opportunities throughout the sales cycle.

👥Target audience: New and existing employees and partners are encouraged to explore these courses.

Demo Portal Resources

About this course:

Your central hub for accessing demo materials, curated assets, and active demo environments designed to support your success. This space brings together everything you need to confidently prepare, customize, and deliver impactful demonstrations, all in one convenient location. Whether you’re exploring available environments, downloading the latest assets, or looking for guidance to enhance your demo experience, the Demo Portal Resources provide streamlined access to the tools and information that help you stay ready and aligned for every opportunity.

👥Target audience: New and existing employees and partners are encouraged to explore these courses.

Instructor-led Training

About these offerings:

Whether you're beginning your journey or looking to sharpen advanced skills, we provide learning opportunities designed to help you grow and succeed in your role.

Our programs are delivered in flexible formats to suit your needs, including self-paced eLearning and engaging instructor-led sessions — available in-person or virtually through Board Academy – Live Trainings. Explore the available courses and enrol in a format that works best for you to continue building your expertise and accelerating your professional growth.

👥Target audience: New and existing employees and partners.

COMING SOON!

Introduction to Board: Analysis and Reporting

About this course:

This new course covers the same material as the Level 100: Foundations of Building in Board course and is designed for new Board developers, power users, and anyone eager to explore Board’s front-end design. The content has been updated from the original written video lessons in the Level 100 course to be more effective and engaging for learners, and it now features a fictional company, Northwind Traders, and its numerous fictional employees. This self-paced training lets learners immerse themselves in hands-on learning by replicating each step in a virtual machine. No technical background is needed, just curiosity and a willingness to explore.

This course is currently in UAT (User Acceptance Testing) and will be available soon with translation features.

Target audience: Board developers, power users, and anyone eager to explore Board’s front-end design.

Support Training Course

About this course:

A learning path designed to onboard internal Support employees that features introductions to support roles and responsibilities, guidance on the troubleshooting process, and walkthroughs of internal tools such as Salesforce Service Cloud.

Target audience: Internal Board employees on the support team.

We have more things coming your way from the Academy. Explore more enablement and learning resources at Board Academy.